
24.05.31_TIL (Team Project: AI NOST Django) _ 7. Studying LangChain and LLMs

Tia (tia) 2024. 5. 31. 19:41

[ Third project ]

Let's build a novel site that uses AI.

++ This is a team project, so collaborating with the team is important.

++ Always check the official Django documentation

https://docs.djangoproject.com/en/4.2/

++ LangChain official documentation

https://www.langchain.com/

++ React official documentation

https://ko.legacy.reactjs.org/ # Korean

https://ko.react.dev/

https://github.com/1489ehdghks/NOST.git


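1. Let's look at the concept of LangChain.

https://youtu.be/BLM3KDaOTJM?si=fwwIF_BWvfaHgc2q

I use this video to pick up the basics of LangChain through background knowledge and introductory exercises. (It is part of a series, so the follow-up videos can be watched as well.)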

https://www.samsungsds.com/kr/insights/what-is-langchain.html

What is LangChain? | Insight Report | Samsung SDS


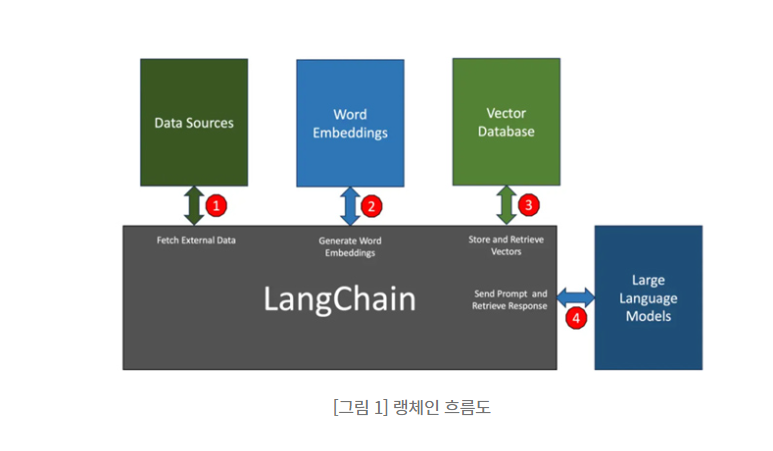

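- What is LangChain?

- LangChain is an SDK designed to simplify integrating LLMs with applications, and it addresses most of the problems described in the Samsung SDS article above.

- It grew out of the need for a platform for quickly building applications and pipelines on top of large language models (LLMs). It is a framework that helps developers easily build LLM applications such as chatbots, question-answering systems, and automatic summarization. (https://wikidocs.net/231151)

- What is an LLM?

- A large language model (LLM) is a deep learning algorithm that can perform a variety of natural language processing (NLP) tasks.

- Beyond teaching human language to artificial intelligence (AI) applications, large language models can be trained to perform other tasks such as understanding protein structures and writing software code.

- Source: https://www.elastic.co/kr/what-is/large-language-models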
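To make the "chain" idea in the notes above a bit more concrete, here is a minimal sketch in plain Python. It does not use LangChain at all: `Step`, `prompt`, and `fake_llm` are hypothetical names made up for illustration, and the "model" only echoes its input. It just shows how a prompt step and a model step can be joined with `|` into one pipeline.

```python
class Step:
    """A tiny stand-in for a composable pipeline step (hypothetical, not LangChain's API)."""

    def __init__(self, func):
        self.func = func

    def __or__(self, other):
        # `a | b` builds a new step that runs a, then feeds its output to b.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value):
        return self.func(value)


# Step 1: fill a prompt template from a dict of variables.
prompt = Step(lambda variables: "Write a short story about {theme}.".format(**variables))

# Step 2: a fake "model" that only echoes the prompt; a real LLM call would go here.
fake_llm = Step(lambda text: f"[model output for: {text}]")

chain = prompt | fake_llm
result = chain.invoke({"theme": "a lighthouse keeper"})
print(result)
```

In a real project the second step would be an actual LLM client, but the shape (dict in, prompt text, model output) stays the same.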

++ To be organized further

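Meeting notes

1. Decided on LangChain in the meeting

We originally aimed for a full-length novel, but decided to finish a short story first and branch out from there.

- An AI that plots the story

- An AI that takes the plotted story and writes chapter 1 from it

- For now, only chapters 1 through 4, following the four-part structure (introduction, development, turn, conclusion)

- Later we plan to branch out into chapters 1-1, 1-2, and so on

- The storage DB will also be improved

- Saving mid-way and then marking a novel as complete is postponed; at first, the user selects everything through chapter 4 in one go and it is saved all at once.

- An AI that takes the plotted story and creates an image that serves as the background for the whole novel

We decided to use these three AIs.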
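The plan from the meeting can be sketched roughly as follows. This is only an illustration under assumptions: `plan_story`, `write_chapter`, `describe_cover`, and the `Novel` class are hypothetical stubs that return placeholder strings, not the team's actual code or any real AI call. The point is the flow: one synopsis, four chapters in order, one background image prompt, all collected before a single save.

```python
from dataclasses import dataclass, field

# The four-part structure agreed on for chapters 1 to 4.
STAGES = ["introduction", "development", "turn", "conclusion"]


def plan_story(theme):
    # Stub for the story-plotting AI.
    return f"Synopsis for a short story about {theme}"


def write_chapter(synopsis, number, stage):
    # Stub for the chapter-writing AI.
    return f"Chapter {number} ({stage}) based on: {synopsis}"


def describe_cover(synopsis):
    # Stub for the AI that produces the background image.
    return f"Background illustration prompt for: {synopsis}"


@dataclass
class Novel:
    synopsis: str
    chapters: list = field(default_factory=list)
    cover_prompt: str = ""


def build_novel(theme):
    synopsis = plan_story(theme)
    novel = Novel(synopsis=synopsis)
    for number, stage in enumerate(STAGES, start=1):
        novel.chapters.append(write_chapter(synopsis, number, stage))
    novel.cover_prompt = describe_cover(synopsis)
    return novel  # everything is ready, so it can be saved in one go


novel = build_novel("a city under the sea")
print(len(novel.chapters))
```

Branching into chapters 1-1, 1-2, and so on would need a tree instead of a flat list, which is why the notes leave it for later.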

2. LangChain structure code

from flask import Flask, request, jsonify
import os
import dotenv
from langchain.memory import ConversationSummaryBufferMemory
from langchain_openai import ChatOpenAI
from langchain.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain.schema.runnable import RunnablePassthrough

# Load environment variables
dotenv_path = os.path.join(os.getcwd(), ".env")
dotenv.load_dotenv(dotenv_path)

# OpenAI settings: one model instance per task, each with its own token budget
llm_story = ChatOpenAI(model="gpt-3.5-turbo",
                       api_key=os.getenv("OPENAI_API_KEY"), max_tokens=800)
llm_character = ChatOpenAI(model="gpt-3.5-turbo",
                           api_key=os.getenv("OPENAI_API_KEY"), max_tokens=500)
llm_final = ChatOpenAI(model="gpt-3.5-turbo",
                       api_key=os.getenv("OPENAI_API_KEY"), max_tokens=1200)

# Memory settings
memory = ConversationSummaryBufferMemory(
    llm=llm_final,
    max_token_limit=1000,
    memory_key="chat_history",
    return_messages=True,
)

# Flask application setup
app = Flask(__name__)

# Prompt templates
story_prompt_template = ChatPromptTemplate.from_messages(
    [
        ("system", "Never give your thoughts and opinions as results.Execute an immersive simulation based on the instructions below.You makes the <user> an observer of the story.-You must become a novelist.There must be sufficient narrative about the past, present, and future, and the grammar and structure of the sentences must be perfect.-The cause of the conflict must be real and relatable. -Show your writing skills professional novelist. Create text Demonstrate expert-level sentence editing skills, Record the character's background, time, and changes in place.Please do it in Korean."),
        MessagesPlaceholder(variable_name="chat_history"),
        ("human", "{theme}"),
    ]
)

character_template = ChatPromptTemplate.from_messages(
    [
        ("system", "Follow the instructions below to run Immersive Character Creation.- You should be a character designer. Explore and observe everything on the spectrum of what your character can do besides the given behaviours.-Fill in the following information to make your character come alive: name, gender, age, height, weight, nationality, values, appearance, hairstyle, eyes, clothing, occupation, etc.But please answer in the language you received."),
        MessagesPlaceholder(variable_name="chat_history"),
        ("human", "{story_prompt}"),
    ]
)

final_story_template = ChatPromptTemplate.from_messages(
    [
        ("system", "You will be given the setting and characters for the story and must write the story following the instructions below.Execute an immersive simulation based on the instructions below.You makes the <user> an observer of the story. - You must become a novelist. There must be sufficient narrative about the past, present, and future, and the grammar and structure of the sentences must be perfect. - Show your writing skills professional novelist. Create text Demonstrate expert-level sentence editing skills, Record the character's background, time, and changes in place. - Proceed with writing considering that this is adult-only content that requires adult certification.You should definitely write a stimulating, creative, and engaging story.- Always describe <char>'s actions rich sentences, emotions (joy, anger, sadness, happy, etc) perfectly.Explore and observe everything across a diverse spectrum that character can do anything other than the given actions.But please answer in the language you received."),
        MessagesPlaceholder(variable_name="chat_history"),
        ("human", "{final_prompt}"),
    ]
)

# Chain setup
def load_memory(_input):
    # Called with the chain input, which is not needed to read the history.
    return memory.load_memory_variables({})["chat_history"]

# Each chain adds chat_history to the input, fills the prompt, then calls the model.
story_chain = RunnablePassthrough.assign(
    chat_history=load_memory) | story_prompt_template | llm_story
character_chain = RunnablePassthrough.assign(
    chat_history=load_memory) | character_template | llm_character
final_chain = RunnablePassthrough.assign(
    chat_history=load_memory) | final_story_template | llm_final

@app.route('/generate_story_prompt', methods=['POST'])
def generate_story_prompt():
    data = request.json
    theme = data.get('theme')
    if not theme:
        return jsonify({"error": "No theme provided"}), 400
    result = story_chain.invoke({"theme": theme, "language": "Korean"})
    memory.save_context({"input": theme}, {"output": result.content})
    return jsonify({"story_prompt": result.content})

@app.route('/generate_characters', methods=['POST'])
def generate_characters():
    data = request.json
    story_prompt = data.get('story_prompt')
    if not story_prompt:
        return jsonify({"error": "No story prompt provided"}), 400
    result = character_chain.invoke(
        {"story_prompt": story_prompt, "language": "Korean"})
    memory.save_context({"input": story_prompt}, {"output": result.content})
    return jsonify({"characters": result.content})

@app.route('/generate_final_story', methods=['POST'])
def generate_final_story():
    data = request.json
    story_prompt = data.get('story_prompt')
    characters = data.get('characters')
    if not story_prompt or not characters:
        return jsonify({"error": "Missing story prompt or characters"}), 400
    final_prompt = f"""
    Subtitle: "A Divided Future: The Struggle of Classes"
    Story Prompt: {story_prompt}
    Character Status: {characters}
    Write a detailed, immersive, and dramatic story based on the above information. Ensure that character dialogues are included and recommended story paths are provided.
    """
    result = final_chain.invoke(
        {"final_prompt": final_prompt, "language": "Korean"})
    memory.save_context({"input": final_prompt}, {"output": result.content})
    return jsonify({"final_story": result.content})

if __name__ == '__main__':
    app.run(debug=True)

The team leader first laid out this structure in Flask, and based on that code

the deputy team leader converted it into Django code.
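The Flask code above uses `RunnablePassthrough.assign(chat_history=load_memory)` so that every request reaches the prompt with the saved conversation attached. As a rough sketch of that idea only, the plain-Python version below passes the input dict through and adds one computed key. `SimpleMemory` and `assign` are hypothetical stand-ins, not LangChain classes, and unlike `ConversationSummaryBufferMemory` this memory never summarizes older turns.

```python
class SimpleMemory:
    """Hypothetical stand-in for a conversation memory; it keeps raw turns only."""

    def __init__(self):
        self.turns = []

    def save_context(self, user_input, model_output):
        self.turns.append(("human", user_input))
        self.turns.append(("ai", model_output))

    def load(self):
        return list(self.turns)


def assign(**extras):
    """Return a function that copies the input dict and adds computed keys."""
    def run(inputs):
        merged = dict(inputs)
        for key, compute in extras.items():
            merged[key] = compute(inputs)
        return merged
    return run


memory = SimpleMemory()
add_history = assign(chat_history=lambda _inputs: memory.load())

# First request: nothing has been saved yet, so the history is empty.
first = add_history({"theme": "rain"})

# After saving one exchange, the next request sees it under chat_history.
memory.save_context("rain", "A story about rain.")
second = add_history({"theme": "snow"})
print(second)
```

Because the history is read at call time, each endpoint sees whatever earlier endpoints saved, which is also why one shared memory object means all users share one history.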

from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate
from langchain.callbacks import StreamingStdOutCallbackHandler

# Stream tokens to stdout as they arrive
chat = ChatOpenAI(temperature=0.1, streaming=True,
                  callbacks=[StreamingStdOutCallbackHandler()])

chef_prompt = ChatPromptTemplate.from_messages([
    ('system', 'You are a world-class international chef. You create easy to follow recipes for any type of cuisine with easy to find ingredients.'),
    ('human', 'I want to cook {cuisine} food.'),
])
chef_chain = chef_prompt | chat

veg_chef_prompt = ChatPromptTemplate.from_messages([
    ('system', "You are a vegetarian chef specialized on making traditional recipes vegetarian. You find alternative ingredients and explain their preparation. You don't radically modify the recipe. If there is no alternative for a food just say you don't know how to replace it."),
    ('human', '{recipe}')
])
veg_chain = veg_chef_prompt | chat

# The output of chef_chain is passed to veg_chain as the 'recipe' variable
final_chain = {'recipe': chef_chain} | veg_chain

final_chain.invoke({
    'cuisine': 'indian'
})
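The expression `{'recipe': chef_chain} | veg_chain` in the code above can be read as "run the first chain, put its result under the key `recipe`, then hand that dict to the second chain". The sketch below mirrors that flow with ordinary functions. `chef`, `veg_chef`, and `run_final` are hypothetical stand-ins that return fixed strings, so no model is called and the recipe text is invented purely for the example.

```python
def chef(inputs):
    # Stand-in for chef_chain: turns a cuisine into a recipe string.
    return f"Recipe for {inputs['cuisine']} curry: chicken, onion, spices"


def veg_chef(inputs):
    # Stand-in for veg_chain: rewrites the recipe it receives under 'recipe'.
    return inputs["recipe"].replace("chicken", "paneer")


def run_final(inputs):
    # Mirrors {'recipe': chef_chain} | veg_chain:
    # first build {'recipe': <chef output>}, then pass that dict on.
    mapped = {"recipe": chef(inputs)}
    return veg_chef(mapped)


output = run_final({"cuisine": "indian"})
print(output)
```

The key name in the dict has to match the variable name in the second prompt (`{recipe}`), otherwise the second step has nothing to fill in.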

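Next, the team leader wrote Flask code that takes a story and checks that it branches into three possible continuations.

Honestly, Flask suddenly stopped making sense to me, so I used GPT to understand it...

The Django version comes out much shorter, so I guess this is why people use Django..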

from flask import Flask, request, jsonify
import os
import dotenv
import logging
import re
from langchain.memory import ConversationSummaryBufferMemory
from langchain_openai import ChatOpenAI
from langchain.prompts import ChatPromptTemplate, MessagesPlaceholder

dotenv.load_dotenv(os.path.join(os.getcwd(), ".env"))

llm_story = ChatOpenAI(model="gpt-3.5-turbo",
                       api_key=os.getenv("OPENAI_API_KEY"), max_tokens=800)
llm_final = ChatOpenAI(model="gpt-3.5-turbo",
                       api_key=os.getenv("OPENAI_API_KEY"), max_tokens=1200)

# Memory setup
memory = ConversationSummaryBufferMemory(
    llm=llm_final, max_token_limit=20000, memory_key="chat_history", return_messages=True)

app = Flask(__name__)

# Template setup
def create_prompt_templates():
    story_prompt_template = ChatPromptTemplate.from_messages([
        ("system", "You are a novelist. Follow the instructions below to create an immersive simulation. Write a detailed story in Korean about the theme provided. Create a basic setting, environment, and characters. Don't go too deep into the setting; we will unravel it slowly later."),
        ("human", "{theme}")
    ])
    final_story_template = ChatPromptTemplate.from_messages([
        ("system", "You are an experienced novelist. Write a concise, realistic, and engaging story based on the provided theme and previous context. Develop the characters, setting, and plot with rich descriptions. Ensure the story flows smoothly, highlighting both hope and despair. Make the narrative provocative and creative. Avoid explicit reader interaction prompts or suggested paths."),
        MessagesPlaceholder(variable_name="chat_history"),
        ("human", "{final_prompt}")
    ])
    recommendation_template = ChatPromptTemplate.from_messages([
        ("system", "Based on the current story prompt, provide three compelling recommendations for the next part of the story. Your recommendations should emphasize hopeful, tragically hopeless, and starkly realistic choices, respectively. Be extremely contextual and realistic with your recommendations. Each recommendation should have 'Title': 'Description'. For example: 'Title': 'The Beginning of a Tragedy','Description': 'The people are kind to the new doctor in town, but under the guise of healing their wounds, the doctor slowly conducts experiments.' The response format is exactly the same as the frames in the example."),
        MessagesPlaceholder(variable_name="chat_history"),
        ("human", "{current_story}")
    ])
    return story_prompt_template, final_story_template, recommendation_template

story_prompt_template, final_story_template, recommendation_template = create_prompt_templates()

def load_memory():
    return memory.load_memory_variables({})["chat_history"]

def parse_recommendations(recommendation_text):
    # Collect up to three {"Title", "Description"} pairs from the model output.
    recommendations = []
    try:
        rec_lines = recommendation_text.split('\n')
        title, description = None, None
        for line in rec_lines:
            if line.startswith("Title:"):
                if title and description:
                    recommendations.append(
                        {"Title": title, "Description": description})
                title = line.split("Title:", 1)[1].strip()
                description = None
            elif line.startswith("Description:"):
                description = line.split("Description:", 1)[1].strip()
                if title and description:
                    recommendations.append(
                        {"Title": title, "Description": description})
                    title, description = None, None
            if len(recommendations) == 3:
                break
    except Exception as e:
        logging.error(f"Error parsing recommendations: {e}")
    return recommendations

def generate_recommendations(chat_history, current_story):
    formatted_recommendation_prompt = recommendation_template.format(
        chat_history=chat_history, current_story=current_story)
    recommendation_result = llm_final.invoke(formatted_recommendation_prompt)
    recommendations = parse_recommendations(recommendation_result.content)
    return recommendations

def remove_recommendation_paths(final_story):
    # Drop everything from "Recommended story paths:" to the end of the text.
    pattern = re.compile(r'Recommended story paths:.*$', re.DOTALL)
    cleaned_story = re.sub(pattern, '', final_story).strip()
    return cleaned_story

@app.route('/synopsis', methods=['POST'])
def generate_synopsis():
    data = request.json
    theme = data.get('theme')
    if not theme:
        return jsonify({"error": "No theme provided"}), 400
    formatted_story_prompt = story_prompt_template.format(theme=theme)
    result = llm_story.invoke(formatted_story_prompt)
    return jsonify({"story_prompt": result.content})

@app.route('/summary', methods=['POST'])
def generate_summary():
    data = request.json
    story_prompt = data.get('story_prompt')
    if not story_prompt:
        return jsonify({"error": "Missing story prompt"}), 400
    chat_history = load_memory()
    final_prompt = f"""
    Story Prompt: {story_prompt}
    Previous Story: {chat_history}
    Write a concise, realistic, and engaging story based on the above information. Highlight both hope and despair in the narrative. Make it provocative and creative.
    """
    formatted_final_prompt = final_story_template.format(
        chat_history=chat_history, final_prompt=final_prompt)
    result = llm_final.invoke(formatted_final_prompt)
    memory.save_context({"input": final_prompt}, {"output": result.content})
    cleaned_story = remove_recommendation_paths(result.content)
    recommendations = generate_recommendations(chat_history, result.content)
    return jsonify({"final_story": cleaned_story, "recommendations": recommendations})

if __name__ == '__main__':
    app.run(debug=True)
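One thing worth checking in the code above: the recommendation prompt shows the format as `'Title': '...','Description': '...'` with quotes, while `parse_recommendations` only accepts lines that start with `Title:` or `Description:`. If the model copies the quoted example literally, the parser may return an empty list. Below is a hedged sketch of a more forgiving parser using only the standard library. `parse_recommendations_tolerant`, `clean`, and the sample text are hypothetical and written for this note; they are not part of the team's code.

```python
import re

# Matches an optionally quoted field name followed by a colon and its value.
FIELD = re.compile(r"""['"]?(Title|Description)['"]?\s*:\s*(.*)""")
# Finds a comma that separates a title from a Description on the same line.
SPLIT = re.compile(r"""\s*,\s*(['"]?Description['"]?\s*:)""")


def clean(value):
    # Remove surrounding whitespace, a trailing comma, and wrapping quotes.
    return value.strip().strip(",").strip().strip("'\"").strip()


def parse_recommendations_tolerant(text, limit=3):
    # Put each field on its own line, even for 'Title': '...','Description': '...'.
    text = SPLIT.sub(r"\n\1", text)
    results, title = [], None
    for line in text.splitlines():
        match = FIELD.search(line)
        if not match:
            continue
        name, value = match.group(1), clean(match.group(2))
        if name == "Title":
            title = value
        elif title and value:
            results.append({"Title": title, "Description": value})
            title = None
        if len(results) == limit:
            break
    return results


sample = (
    "'Title': 'The Beginning of a Tragedy','Description': 'The doctor slowly conducts experiments.'\n"
    "1. Title: A New Dawn\n"
    "Description: The town rebuilds."
)
parsed = parse_recommendations_tolerant(sample)
print(parsed)
```

Asking the model for JSON and parsing it with `json.loads` would be another option, at the cost of handling invalid JSON when the model drifts from the format.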